I spent the last week improving the performance of a few APIs at work and cut response times by 5x.

I did just three basic things, and they improved performance across most of our API routes.

This post should be especially helpful if you are new to JavaScript, so let's get right into it.

Note that our REST APIs are built with Node.js, TypeScript and MongoDB, so a few concepts in this guide apply only to that stack.

Using Promise.all()

When reviewing old code, I found something like this:

```javascript
const user = await getUser(userId);
const quizData = await getScore(activityId, userId);
const attemptData = await getAttemptData(activityId, userId);
```

This is just an example, not the actual code from work.

Here is what's happening:

- We wait until the user data is fetched,
- then we wait for quizData to be fetched,
- and only then do we fetch attemptData.

Notice that quizData does not depend on the user data, and attemptData does not depend on quizData. So why wait for each previous promise to resolve before starting the next one?

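We can fetch independent values concurrently. The total wait then becomes roughly the time of the slowest call rather than the sum of all of them, which can roughly double API performance.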

Here is how you do it:

```javascript
const [user, quizData, attemptData] = await Promise.all([
  getUser(userId),
  getScore(activityId, userId),
  getAttemptData(activityId, userId),
]);
```

For example, if your API was taking 700 ms, this can bring it down to around 300 to 350 ms.
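To see where the saving comes from, here is a small, self-contained sketch. The three fetchers are hypothetical stand-ins that only wait a fixed time. The delays (300, 200 and 200 ms) are chosen so that the sequential total matches the 700 ms example. These are not the real getUser, getScore and getAttemptData.

```javascript
// Hypothetical stand-ins for getUser, getScore and getAttemptData.
// Each one resolves after a fixed delay to simulate a DB call.
const delay = (ms, value) =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

const getUser = (userId) => delay(300, { userId, name: 'X' });
const getScore = (activityId, userId) => delay(200, { activityId, userId, score: 50 });
const getAttemptData = (activityId, userId) => delay(200, { activityId, userId, attempts: 2 });

// Each await blocks the next call: total time is roughly 300 + 200 + 200 ms.
async function fetchSequentially(activityId, userId) {
  const user = await getUser(userId);
  const quizData = await getScore(activityId, userId);
  const attemptData = await getAttemptData(activityId, userId);
  return [user, quizData, attemptData];
}

// All three calls start at once: total time is roughly the slowest call (300 ms).
// Promise.all rejects as soon as any one of the promises rejects.
function fetchConcurrently(activityId, userId) {
  return Promise.all([
    getUser(userId),
    getScore(activityId, userId),
    getAttemptData(activityId, userId),
  ]);
}

// Runs fn, logs how long it took and returns both the result and the time.
async function timeIt(label, fn) {
  const start = Date.now();
  const result = await fn();
  const elapsed = Date.now() - start;
  console.log(`${label}: ${elapsed} ms`);
  return { result, elapsed };
}

async function main() {
  const sequential = await timeIt('sequential', () => fetchSequentially('quiz-1', '123'));
  const concurrent = await timeIt('concurrent', () => fetchConcurrently('quiz-1', '123'));
  return { sequential, concurrent };
}
```

Calling `main()` should log roughly 700 ms for the sequential version and roughly 300 ms for the concurrent one. Both return the same data. If you need every result even when some calls fail, `Promise.allSettled` is the built-in alternative to `Promise.all`.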

Reducing DB Calls

Another optimisation is to reduce the number of DB calls as much as possible. Let me explain.

Let's say you have 5 userIds and you want to fetch the user details for them.

Here is the bad way, fetching user details one by one:

```javascript
const userIds = ['123', '345', '442', '567', '998'];

async function getUserDetails(userIds) {
  const userDetails = [];
  for (const userId of userIds) {
    // One round trip per user: each await waits for the previous call to finish
    const userDetail = await fetchUserDetails(userId);
    userDetails.push(userDetail);
  }
  return userDetails;
}

const users = await getUserDetails(userIds);
```

This code gets slower as the user list grows, because every call waits for the previous one to finish.

Here we make 5 separate inter-service API/DB calls to get 5 resources.

Ideally, you shouldn't do this.

Here is how you reduce this to just one DB call:

```javascript
async function getUserDetails(userIds) {
  const userDetails = await fetchUserDetails(userIds);
  return userDetails;
}
```

There is now just one awaited call, and fetchUserDetails(userIds) should fetch all the resources in a single DB call.

For example, in MongoDB the implementation of this function could look like this:

```javascript
async function fetchUserDetails(userIds) {
  // Match every document whose userId is one of the values in userIds
  const userDetails = await User.find({ userId: { $in: userIds } });
  return userDetails;
}
```

In MongoDB, the $in operator matches documents whose field equals any value in an array, so one query can fetch all the users.

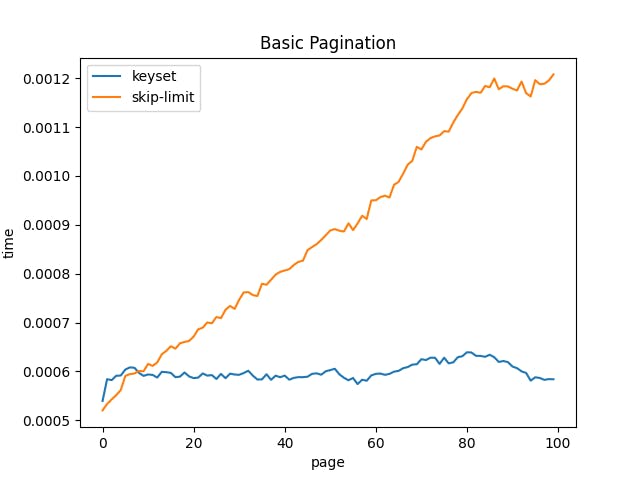
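Here is a runnable sketch of the batched version. There is no database here, so User is a hypothetical in-memory stand-in. It only supports the `{ userId: { $in: [...] } }` filter and counts how many times it is called. The re-ordering step is this sketch's own addition. MongoDB does not guarantee that $in results come back in the order of the input array, and ids with no matching document are simply absent. The helper therefore maps the results back onto the requested ids.

```javascript
// Hypothetical in-memory stand-in for the User model used above.
// It only understands the { userId: { $in: [...] } } filter and counts calls.
const storedUsers = [
  { userId: '998', name: 'E' },
  { userId: '123', name: 'A' },
  { userId: '567', name: 'D' },
  { userId: '345', name: 'B' },
  { userId: '442', name: 'C' },
];

const User = {
  calls: 0,
  async find(filter) {
    this.calls += 1;
    const wanted = new Set(filter.userId.$in);
    // MongoDB does not promise results in the order of the $in array;
    // this fake returns them in storage order to mimic that.
    return storedUsers.filter((user) => wanted.has(user.userId));
  },
};

// One round trip for all ids, then re-order to match the requested ids.
// Ids with no matching document come back as null.
async function fetchUserDetails(userIds) {
  const users = await User.find({ userId: { $in: userIds } });
  const byId = new Map(users.map((user) => [user.userId, user]));
  return userIds.map((id) => byId.get(id) ?? null);
}
```

Calling `fetchUserDetails(['123', '345', '442', '567', '998'])` returns the five users in the requested order, and `User.calls` is 1.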

Avoiding cursor.skip in Pagination

You can skip this part if you don't use MongoDB.

Pagination divides data into pages and sends only the page that is needed, which saves bandwidth and speeds up responses.

I still remember how my friend Shithij explained pagination in one line three years back, using P**n Hub as the example: "The 1, 2, 3 buttons you click until you find the right video."

We can implement pagination in MongoDB with two cursor methods, skip and limit:

```javascript
// Page 1
await User.find().limit(5);

// Page 2
await User.find().skip(5).limit(5);

// Page 3
await User.find().skip(10).limit(5);
```

cursor.limit sets the maximum number of documents to return (the page size), and cursor.skip skips a given number of documents before results start.

To get page 2, we are effectively saying "give me 5 documents after skipping the first 5."

The problem is that cursor.skip has to walk through every skipped document before it can return the first result of the page.

So if there are 100K records and you want the last page, MongoDB has to go through nearly all of them, starting from the first, just to skip them. There is no way around this if you are implementing specific-page (basic) pagination, where users can jump to any page number.
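A toy model makes the cost of skip visible. This is not how MongoDB implements skip internally. offsetPage is a hypothetical helper that walks an in-memory array and counts how many documents it passes before the page starts. That count is the part that grows with the page number.

```javascript
// Toy model of skip/limit pagination over an in-memory "collection".
// `skipped` counts the documents visited only to be skipped, mimicking the
// work cursor.skip does before it can return the first result of a page.
function offsetPage(docs, page, pageSize) {
  const skip = (page - 1) * pageSize; // page 2 with pageSize 5 -> skip 5
  let skipped = 0;
  const results = [];
  for (const doc of docs) {
    if (skipped < skip) {
      skipped += 1; // skipped documents still have to be visited
      continue;
    }
    if (results.length === pageSize) break;
    results.push(doc);
  }
  return { results, skipped };
}

const docs = Array.from({ length: 100_000 }, (_, i) => ({ _id: i + 1 }));
const pageSize = 5;
const lastPage = Math.ceil(docs.length / pageSize);

console.log(offsetPage(docs, 1, pageSize).skipped); // 0
console.log(offsetPage(docs, lastPage, pageSize).skipped); // 99995
```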

But in my project, we have a dashboard where a teacher can view all student comments in order, with infinite scrolling.

It's basically like scrolling through a YouTube comment section.

If you also want to implement something like this (serial pagination or infinite scrolling), you don't actually need cursor.skip.

We can implement keyset pagination instead and speed things up.

How does this work?

In keyset pagination, we use the _id field that MongoDB stores on every document to retrieve the next set of records, something like this:

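```javascript
// Page 1
await User.find().sort({ _id: 1 }).limit(5);

// Page 2 (last_id: the _id of the last document on page 1, sent by the client)
await User.find({ _id: { $gt: last_id } }).sort({ _id: 1 }).limit(5);

// Page 3 (last_id: the _id of the last document on page 2, sent by the client)
await User.find({ _id: { $gt: last_id } }).sort({ _id: 1 }).limit(5);
```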

The query for page 2 says, in effect, "get me 5 documents whose _id is greater than last_id."

This works because the default _id is an ObjectId whose first 4 bytes are a creation timestamp, so documents inserted later generally get larger _id values. Here is a screenshot from the MongoDB docs.

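Basically, if you insert two documents into a collection, the first document's _id will usually be less than the second's. ObjectIds are not strictly monotonic (for example, when created in the same second by different processes). Keyset pagination still works, because it only needs _id to be unique and sortable.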

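MongoDB indexes _id by default. A $gt or $lt query can therefore jump straight to its starting point in the index (roughly O(log n)) instead of walking past every earlier document the way skip does.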

Your client can store the latest last_id and send it in the request body of the next request.
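Here is a similar toy model of keyset pagination. A sorted in-memory array stands in for the _id index, and plain numbers stand in for ObjectIds. keysetPage and firstGreaterThan are hypothetical helpers. Binary search plays the role of the index seek, so fetching a deep page costs about the same as fetching the first one.

```javascript
// Toy model of keyset pagination. The sorted array plays the role of the
// _id index, so we can binary-search for the first _id greater than lastId
// instead of walking past every earlier document.
// Plain numbers replace real ObjectIds to keep the sketch small.
const index = Array.from({ length: 100_000 }, (_, i) => ({ _id: i + 1 }));

// Returns the position of the first document whose _id is > lastId.
function firstGreaterThan(sorted, lastId) {
  let lo = 0;
  let hi = sorted.length;
  while (lo < hi) {
    const mid = (lo + hi) >>> 1;
    if (sorted[mid]._id > lastId) hi = mid;
    else lo = mid + 1;
  }
  return lo;
}

// lastId is undefined for the first page; afterwards the client sends back
// the nextLastId it received with the previous page.
function keysetPage(sorted, lastId, limit) {
  const start = lastId === undefined ? 0 : firstGreaterThan(sorted, lastId);
  const results = sorted.slice(start, start + limit);
  // If the page is empty, keep the same cursor so the client can retry later.
  const nextLastId = results.length > 0 ? results[results.length - 1]._id : lastId;
  return { results, nextLastId };
}

const page1 = keysetPage(index, undefined, 5);
const page2 = keysetPage(index, page1.nextLastId, 5);
console.log(page2.results.map((doc) => doc._id)); // [ 6, 7, 8, 9, 10 ]
```

In a real API, nextLastId is the value you would return to the client with each page. The client then sends it back as last_id.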

Extra tip: if you want the newest documents first while using serial pagination, sort by _id in descending order and use $lt instead of $gt:

```javascript
// Page 1
await User.find().sort({ _id: -1 }).limit(5);

// Page 2 (last_id: the _id of the last document on page 1, sent by the client)
await User.find({ _id: { $lt: last_id } }).sort({ _id: -1 }).limit(5);

// Page 3 (last_id: the _id of the last document on page 2, sent by the client)
await User.find({ _id: { $lt: last_id } }).sort({ _id: -1 }).limit(5);
```
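The ascending and descending versions differ only in the comparison operator and the sort direction, so a small helper can build either one. buildKeysetQuery is a hypothetical helper, not part of MongoDB or Mongoose. It only returns plain filter and sort objects, so it can be checked without a database.

```javascript
// Builds the filter and sort for one page of keyset pagination.
// direction 'asc' = oldest first ($gt), 'desc' = newest first ($lt).
// lastId is the _id of the last document the client already has,
// or undefined for the first page.
function buildKeysetQuery(lastId, direction = 'asc') {
  const ascending = direction === 'asc';
  const filter =
    lastId === undefined ? {} : { _id: { [ascending ? '$gt' : '$lt']: lastId } };
  const sort = { _id: ascending ? 1 : -1 };
  return { filter, sort };
}

// With a Mongoose-style model, the result could be used like this:
//   const { filter, sort } = buildKeysetQuery(lastId, 'desc');
//   const page = await User.find(filter).sort(sort).limit(5);
```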

Miscellaneous Tips

Keep all Related Data Together

Simple as it sounds, this is sometimes missed. Design your schema around what you query and how you query it, so that you can fetch all the data you need in one go.

So plan properly, yo.
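As an illustration, here are two hypothetical ways to store the student comments from the teacher dashboard mentioned earlier. The collections, field names and manual query counting are all made up for this sketch. The point is that embedding the fields the dashboard displays lets one query return everything it needs.

```javascript
// Hypothetical data for the teacher dashboard; field names are made up.

// Option 1, normalized: comments only reference the student by id.
const students = [
  { _id: 's1', name: 'Asha' },
  { _id: 's2', name: 'Ravi' },
];
const commentsNormalized = [
  { _id: 'c1', studentId: 's1', text: 'Great quiz!' },
  { _id: 'c2', studentId: 's2', text: 'Question 3 was tricky' },
];

// Option 2, embedded: each comment carries the student fields the dashboard shows.
const commentsEmbedded = [
  { _id: 'c1', student: { _id: 's1', name: 'Asha' }, text: 'Great quiz!' },
  { _id: 'c2', student: { _id: 's2', name: 'Ravi' }, text: 'Question 3 was tricky' },
];

// Normalized: one query for comments, then a second ($in-style) query for students.
function dashboardRowsNormalized() {
  let queries = 1; // read comments
  const ids = new Set(commentsNormalized.map((c) => c.studentId));
  queries += 1; // read the matching students
  const nameById = new Map(
    students.filter((s) => ids.has(s._id)).map((s) => [s._id, s.name]),
  );
  const rows = commentsNormalized.map((c) => ({ name: nameById.get(c.studentId), text: c.text }));
  return { rows, queries };
}

// Embedded: a single read of comments already has everything each row needs.
function dashboardRowsEmbedded() {
  const rows = commentsEmbedded.map((c) => ({ name: c.student.name, text: c.text }));
  return { rows, queries: 1 };
}
```

The trade-off is duplication. If a student changes their name, every embedded copy has to be updated too. So embed the fields you read often and change rarely.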

Try to Avoid Nested For Loops

If you are using a for loop inside a for loop (a nested loop), there is often a way to reduce it to a single loop.

For example, say you want to send user information along with a game score that comes from a different source. You might do something like this:

```javascript
function getUserDetailsWithScore() {
  const userDetails = [
    { userId: '123', name: 'X' },
    { userId: '345', name: 'Y' },
  ];

  const gameResults = [
    { userId: '123', score: 50 },
    { userId: '345', score: 20 },
  ];

  // For every user, scan every game result looking for a matching userId
  for (const user of userDetails) {
    for (const result of gameResults) {
      if (result.userId === user.userId) {
        user.score = result.score;
      }
    }
  }

  return userDetails;
}

console.log(getUserDetailsWithScore());
```

If you write something like this, with one for loop nested inside another, or call Array.prototype.find() inside a for loop, that's bad practice. Your time complexity becomes O(n^2), or more precisely O(n × m) for n users and m results.

Here is how I would do it.

```javascript
function getUserDetailsWithScore() {
  const userDetails = [
    { userId: '123', name: 'X' },
    { userId: '345', name: 'Y' },
  ];

  const gameResults = [
    { userId: '123', score: 50 },
    { userId: '345', score: 20 },
  ];

  // One pass over gameResults to build a lookup object keyed by userId
  const userScoreMap = {};
  gameResults.forEach((result) => {
    userScoreMap[result.userId] = result.score;
  });

  // One pass over userDetails; users with no result get a score of 0
  for (const user of userDetails) {
    user.score = userScoreMap[user.userId] ?? 0;
  }

  return userDetails;
}

const userDetails = getUserDetailsWithScore();
```

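We find a value common to both collections, use it as the key, and build an object map. In this case the key is userId, and we store each score as userScoreMap[userId] = score. Building the map takes one pass over gameResults, and each lookup takes constant time on average. The overall work is therefore O(n + m) instead of O(n × m).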

Do you know how much faster the second approach is?

If you run the same code with 100K records each in userDetails and gameResults, the nested-loop version takes 27 seconds and the map version takes 18 milliseconds. You get the idea: try to avoid nested loops.
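If you want to measure the gap yourself, here is a small benchmark sketch. It is not the author's benchmark and will not reproduce the exact 27 s / 18 ms figures, which depend on data size and hardware. makeData, joinNested and joinWithMap are hypothetical helpers. joinNested uses the Array.prototype.find() variant mentioned above. joinWithMap swaps the plain object for a built-in Map, which does the same keyed lookup. Both assume each userId appears at most once in gameResults.

```javascript
// Builds n users and n game results with matching userIds (made-up data).
function makeData(n) {
  const userDetails = [];
  const gameResults = [];
  for (let i = 0; i < n; i += 1) {
    userDetails.push({ userId: String(i), name: `user-${i}` });
    gameResults.push({ userId: String(i), score: i % 100 });
  }
  return { userDetails, gameResults };
}

// O(n * m): for every user, scan the results until a match is found.
function joinNested(userDetails, gameResults) {
  return userDetails.map((user) => {
    const result = gameResults.find((r) => r.userId === user.userId);
    return { ...user, score: result ? result.score : 0 };
  });
}

// O(n + m): one pass to build a Map, one pass to look scores up.
function joinWithMap(userDetails, gameResults) {
  const scoreByUserId = new Map(gameResults.map((r) => [r.userId, r.score]));
  return userDetails.map((user) => ({ ...user, score: scoreByUserId.get(user.userId) ?? 0 }));
}

// Runs fn once, logs the elapsed time in milliseconds and returns its result.
function bench(label, fn) {
  const start = process.hrtime.bigint();
  const out = fn();
  const ms = Number(process.hrtime.bigint() - start) / 1e6;
  console.log(`${label}: ${ms.toFixed(1)} ms`);
  return out;
}

const { userDetails, gameResults } = makeData(10_000);
const nested = bench('nested', () => joinNested(userDetails, gameResults));
const mapped = bench('map', () => joinWithMap(userDetails, gameResults));
```

With n = 10,000, the nested version already does about 50 million comparisons in total. Raise n to watch the difference grow.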

Conclusion

These are a few basic optimisations you can apply to your project to improve response times and reduce bandwidth.

I hope you learnt something new. If you did, go ahead and click all the reaction emojis Hashnode gives you.

Also, if you have other tips, do share them in the comments.